CAPO: Control-Aware Prediction Objectives for Autonomous Driving.

Jun 1, 2022

Rowan McAllister

Blake Wulfe

Jean Mercat

Logan Ellis

Sergey Levine

Adrien Gaidon

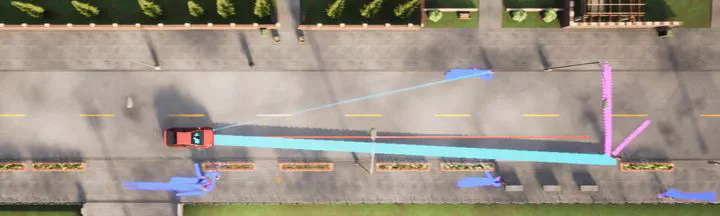

Consider an autonomous vehicle planning to drive ahead along the arrow. In this example, the biker has a 95% chance of turning right and a 5% chance of crossing the road. Should 95% of the samples from a trajectory forecasting model point towards turning right? How should the risk to the autonomous vehicle be estimated? How can the dangerous events be captured?

Abstract

Autonomous vehicle software is typically structured as a modular pipeline of individual components (e.g., perception, prediction, and planning) to help separate concerns into interpretable sub-tasks. Even when end-to-end training is possible, each module has its own set of objectives used for safety assurance, sample efficiency, regularization, or interpretability. However, intermediate objectives do not always align with overall system performance. For example, optimizing the likelihood of a trajectory prediction module might focus more on easy-to-predict agents than on safety-critical or rare behaviors (e.g., jaywalking). In this paper, we present control-aware prediction objectives (CAPOs) to evaluate the downstream effect of predictions on control without requiring the planner to be differentiable. We propose two types of importance weights that weight the predictive likelihood: one using an attention model between agents, and another based on control variation when exchanging predicted trajectories for ground truth trajectories. Experimentally, we show our objectives improve overall system performance in suburban driving scenarios using the CARLA simulator.
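To make the control-variation idea concrete, here is a minimal sketch of a weighted predictive likelihood. It is an illustration under stated assumptions, not the paper's implementation: the planner (`toy_planner`), the isotropic Gaussian likelihood, the absolute-difference weight, and the `eps` floor are all hypothetical choices. The weight is computed by calling the planner twice, once with the predicted trajectory and once with the ground truth, and is treated as a constant, so the planner never needs to be differentiable.

```python
import math

def gaussian_nll(pred, truth, sigma=1.0):
    """NLL of the ground-truth trajectory under an isotropic Gaussian
    centered on the predicted waypoints (assumed likelihood model)."""
    sq_err = sum((px - tx) ** 2 + (py - ty) ** 2
                 for (px, py), (tx, ty) in zip(pred, truth))
    dims = 2 * len(truth)
    return 0.5 * sq_err / sigma ** 2 + 0.5 * dims * math.log(2 * math.pi * sigma ** 2)

def toy_planner(agent_traj, lane_half_width=2.0):
    """Hypothetical black-box planner: command a braking acceleration
    if any agent waypoint enters the ego lane (|y| < lane_half_width)."""
    in_lane = any(abs(y) < lane_half_width for _, y in agent_traj)
    return -3.0 if in_lane else 0.0

def control_variation_weight(pred, truth, eps=0.1):
    """Weight an agent by how much the planned control changes when the
    predicted trajectory is exchanged for the ground truth.
    eps (assumed) keeps a small weight on agents that do not affect control."""
    return eps + abs(toy_planner(pred) - toy_planner(truth))

def weighted_nll(batch):
    """Importance-weighted average NLL over (pred, truth) pairs."""
    weights = [control_variation_weight(p, t) for p, t in batch]
    losses = [gaussian_nll(p, t) for p, t in batch]
    return sum(w * l for w, l in zip(weights, losses)) / sum(weights)

# The biker actually crosses the road, but the model predicted a right turn.
pred_turn = [(10.0, 5.0), (11.0, 5.0), (12.0, 5.0)]
truth_cross = [(10.0, 5.0), (10.0, 3.0), (10.0, 1.0)]
# A distant agent stays out of the ego lane in both prediction and truth.
pred_far = [(30.0, 8.0), (31.0, 8.0), (32.0, 8.0)]
truth_far = [(30.0, 8.0), (31.0, 8.5), (32.0, 9.0)]

w_cross = control_variation_weight(pred_turn, truth_cross)
w_far = control_variation_weight(pred_far, truth_far)
loss = weighted_nll([(pred_turn, truth_cross), (pred_far, truth_far)])
```

In this toy setup the mispredicted crossing changes the planner's command, so it receives a much larger weight than the distant agent, whose prediction error has no effect on control. The attention-based weights mentioned in the abstract are not sketched here.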

Publication

In International Conference on Robotics and Automation.