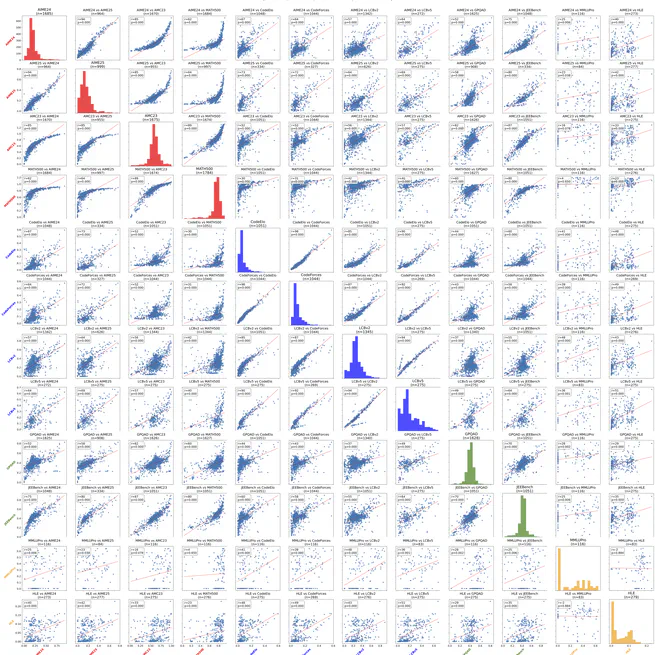

OpenThoughts3: Reasoning LLM Evaluation Meta-Analysis

Meta-analysis of reasoning LLM evaluation, benchmarks, and experimental data from the OpenThoughts3 project.

Jun 4, 2025

OpenThoughts: Data Recipes for Reasoning Models

Jun 4, 2025

Mamba-7B

This is a 7B-parameter model with the Mamba architecture, trained on multiple epochs (1.2T tokens) of the RefinedWeb dataset. Mamba is a state-space model that, unlike the standard transformer architecture, does not use self-attention.

Jun 28, 2024

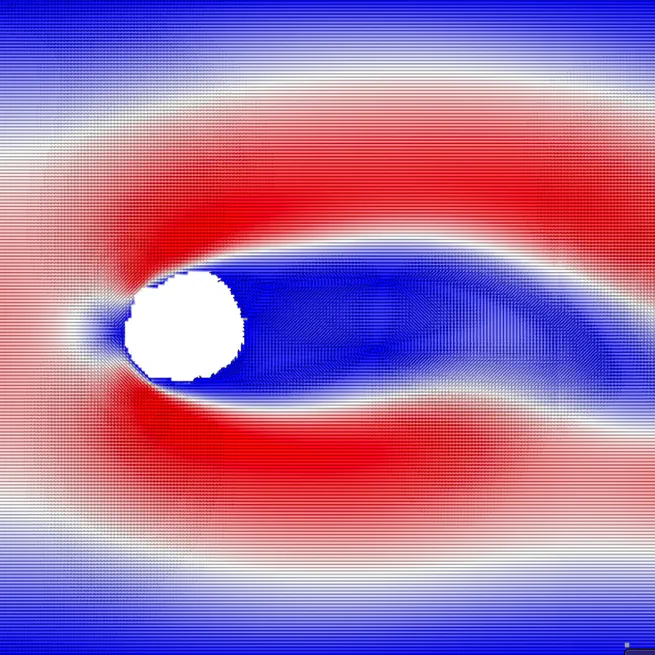

Two-dimensional lattice Boltzmann fluid simulation

A C++ implementation of the D2Q9 lattice Boltzmann fluid simulation with immersed boundaries.

Oct 25, 2017

Some WebGL experiments

WebGL Demo
